First, the short answer: GPU, FPGA, ASIC, and CPU can all be used in AI devices. The GPU is the most common AI chip, combining high computing power with the most mature technology and software ecosystem.

PCBONLINE, an OEM PCB manufacturer for AI electronic devices, provides turnkey PCB (printed circuit board) manufacturing and assembly with GPU, FPGA, ASIC, or CPU. If you need electronics manufacturing services for AI servers, network equipment, AI accelerators, or similar devices, order turnkey PCB services from us.

GPU vs FPGA vs ASIC vs CPU Comparison

Before we introduce the GPU, FPGA, ASIC, and CPU one by one, let's first see their comparison table below.

| Chip | Flexibility | Compute power | Power efficiency | Cost | Typical AI application |
|---|---|---|---|---|---|
| CPU | High | Low | Generally low | Low | Control, lightweight inference |
| GPU | Medium | High | Medium to high | High | Training, high-volume inference |
| FPGA | High | Medium | Medium to high | High | Edge real-time AI, low-latency applications |
| ASIC | Low | Highest | Highest | Very high | NPU, TPU, dedicated AI chips |
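To make the trade-offs in the table easier to apply, the sketch below encodes its qualitative ratings as data and filters chip types against minimum requirements. The ordinal scores in `LEVEL` and the `shortlist` helper are hypothetical illustrations, not an industry selection method; real chip selection also weighs software stack, supply, and board constraints.

```python
# Qualitative ratings transcribed from the comparison table.
# LEVEL is a hypothetical ordinal scale (higher = more/larger).
LEVEL = {"Low": 1, "Generally low": 1, "Medium": 2, "Medium to high": 2.5,
         "High": 3, "Highest": 4, "Very high": 4}

CHIPS = {
    "CPU":  {"flexibility": "High",   "compute": "Low",     "efficiency": "Generally low",  "cost": "Low"},
    "GPU":  {"flexibility": "Medium", "compute": "High",    "efficiency": "Medium to high", "cost": "High"},
    "FPGA": {"flexibility": "High",   "compute": "Medium",  "efficiency": "Medium to high", "cost": "High"},
    "ASIC": {"flexibility": "Low",    "compute": "Highest", "efficiency": "Highest",        "cost": "Very high"},
}


def shortlist(min_flexibility="Low", min_compute="Low", max_cost="Very high"):
    """Return chip types whose table ratings meet every threshold."""
    result = []
    for chip, r in CHIPS.items():
        if (LEVEL[r["flexibility"]] >= LEVEL[min_flexibility]
                and LEVEL[r["compute"]] >= LEVEL[min_compute]
                and LEVEL[r["cost"]] <= LEVEL[max_cost]):
            result.append(chip)
    return result


# Example: need high compute but cannot accept "Very high" cost.
print(shortlist(min_compute="High", max_cost="High"))
```

With these thresholds only the GPU qualifies: the ASIC meets the compute bar but exceeds the cost limit.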

The table below compares their typical packages and pin counts.

| Chip | Package type | Typical pin/ball count | Examples |
|---|---|---|---|
| GPU | Large BGA (ball grid array) | 1,000–6,000+ | A100: 6,096 balls |
| FPGA | Large BGA / FCLGA (flip-chip LGA) | 500–5,000+ | Xilinx Virtex UltraScale+: 2,800–4,600 |
| CPU | LGA (land grid array) / PGA (pin grid array) | 1,000–6,000+ | Xeon (LGA4677): 4,677 / EPYC (SP5): 6,096 |
| ASIC | BGA / QFP (quad flat package) / custom | 64–5,000+ | TPU: 4,000+ / NPU: 200 |
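The pin counts above follow mostly from package body size and ball pitch. As a rough, simplified model (an assumption: a square full-grid array with one ball per pitch along each edge, optionally with a square depopulated center), the ball count can be estimated as follows. Real packages have keep-outs and irregular depopulation, so treat the numbers as order-of-magnitude only; `full_array_balls` is a hypothetical helper.

```python
def full_array_balls(body_mm: float, pitch_mm: float, depop_mm: float = 0.0) -> int:
    """Estimate balls in a square full-grid BGA (simplified model).

    depop_mm is the side length of an optional empty square in the center.
    Dimensions are converted to integer micrometers to avoid float rounding.
    """
    body_um = round(body_mm * 1000)
    pitch_um = round(pitch_mm * 1000)
    per_side = body_um // pitch_um          # balls along one edge
    total = per_side ** 2
    if depop_mm > 0:
        hole = round(depop_mm * 1000) // pitch_um
        total -= hole ** 2                  # remove the depopulated center
    return total


print(full_array_balls(45, 1.0))   # 45 x 45 mm body at 1.0 mm pitch
print(full_array_balls(45, 0.8))   # same body, finer 0.8 mm pitch
```

Shrinking the pitch from 1.0 mm to 0.8 mm raises the estimate from 2,025 to 3,136 balls on the same body, which is why fine-pitch BGAs drive PCB layer count and HDI via requirements.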

Next, let's see GPU, FPGA, ASIC, and CPU one by one in detail!

GPU (Graphics Processing Unit)

The GPU is the most powerful general-purpose chip for parallel computing and the mainstream source of compute in AI servers. It is currently the workhorse of AI servers and high-performance AI computing: it is the most widely used AI chip, offers very high compute power, and has the most mature ecosystem.

Packaging:

GPUs use large BGA packages with numerous pins, designed to support high I/O and large power draw (e.g., NVIDIA A100, RTX series).

Key features (hardware):

Large package body with dense solder pads for high-density routing and high-current power delivery.

Power & cooling:

- High power consumption: typical server GPUs draw 200W to 400W, flagship parts such as the NVIDIA H100 SXM reach up to 700W, and consumer cards can draw 150W or less.

- Requires large heatsinks + fans / liquid cooling.

- Low thermal resistance: the heatsink or cold plate contacts the GPU die directly through a thermal interface material.

Thermal design focus:

- GPU core temperature is usually kept between 70℃ and 85℃.

- High-end server GPUs often utilize liquid cooling + heat pipes + thermal plates.
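The cooling targets above can be checked with the standard steady-state relation T_die = T_ambient + P × θ_ja, where θ_ja is the total die-to-ambient thermal resistance in ℃/W. The sketch below assumes a single lumped thermal resistance and a 35℃ inlet air temperature (both illustrative assumptions); it computes the largest θ_ja a cooling solution may have to keep a 400W GPU at 85℃.

```python
def max_thermal_resistance(power_w: float, t_ambient_c: float, t_target_c: float) -> float:
    """Largest die-to-ambient thermal resistance (degC/W) that keeps
    the die at or below t_target_c, using T_die = T_amb + P * theta."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return (t_target_c - t_ambient_c) / power_w


def die_temperature(power_w: float, t_ambient_c: float, theta_ja: float) -> float:
    """Steady-state die temperature for a given lumped thermal resistance."""
    return t_ambient_c + power_w * theta_ja


print(max_thermal_resistance(400, 35, 85))   # required theta for a 400 W GPU
print(die_temperature(400, 35, 0.1))         # die temp with a 0.1 degC/W solution
```

A 400W part needs roughly 0.125℃/W or better, which is hard to reach with air alone and explains the move to liquid cooling and cold plates on high-end server GPUs.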

Key features (AI):

- Massive parallel processing, ideal for matrix operations (deep learning training/inference).

- Mature development ecosystem (CUDA, TensorRT).
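Matrix multiplication suits GPUs because every output element is an independent dot product. The sketch below shows this with a plain-Python multiply and counts the work: C = A·B with A of size m×k and B of size k×n takes 2·m·k·n floating-point operations (one multiply and one add per term). The 100 TFLOP/s sustained throughput in the example is a hypothetical figure, not the rating of any specific GPU.

```python
def matmul(a, b):
    """Naive C = A @ B. Each C[i][j] depends only on row i of A and
    column j of B, so all m*n entries could be computed in parallel."""
    k = len(b)
    n = len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(len(a))]


def matmul_flops(m: int, k: int, n: int) -> int:
    """FLOPs for an (m x k) @ (k x n) product: one multiply + one add per term."""
    return 2 * m * k * n


def est_seconds(flops: float, sustained_flops_per_s: float) -> float:
    """Ideal compute time at a given sustained throughput."""
    return flops / sustained_flops_per_s


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
flops = matmul_flops(4096, 4096, 4096)        # one large dense layer
print(flops, est_seconds(flops, 100e12))      # at a hypothetical 100 TFLOP/s
```

A single 4096×4096×4096 product is about 1.37×10^11 FLOPs; spread across thousands of parallel cores it finishes in about a millisecond at the assumed rate, while a serial loop would take orders of magnitude longer.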

Applications:

- AI data center training and inference

- AI PCs and edge AI gateways

- Autonomous driving domain controllers

ASIC (Application-Specific Integrated Circuit)

An ASIC is a chip custom-designed for a specific AI algorithm or workload (e.g., TPU, NPU). It is specialized and highly efficient but has low flexibility.

Packaging:

ASICs use medium to large BGA or FCLGA (flip-chip LGA) packages. Package size and pin count (roughly 500 to 2,000+) vary with the chip's scale and complexity.

Power & cooling:

- Variable power consumption: low-end units draw tens of watts, while high-end data center TPUs draw from tens to hundreds of watts.

- Edge ASICs often use small heatsinks or PCB heat dissipation; high-power ASICs require air or liquid cooling.

Thermal design focus:

- Edge device ASIC core temperatures are typically controlled below 70℃.

- High-end ASICs/TPUs require heatsinks + fans + thermal interface materials.

Key features (AI):

- Highest performance and energy efficiency (TOPS/W), delivering the most compute per unit of power.

- Long design cycle, high initial cost, and low flexibility.
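TOPS/W is simply peak operations per second (in trillions) divided by power. Because 1 TOPS/W equals 10^12 operations per joule, it also gives the energy per inference directly. The chip figures below (a 4 TOPS NPU at 2W and a 400 TOPS accelerator at 400W) and the 8 GOP model size are hypothetical illustrations, not vendor data; vendors usually quote peak INT8 TOPS, so real efficiency is lower.

```python
def tops_per_watt(tops: float, watts: float) -> float:
    """Efficiency metric: trillions of operations per second per watt."""
    return tops / watts


def energy_per_inference_j(ops_per_inference: float, tops_per_w: float) -> float:
    """Joules per inference. 1 TOPS/W = 1e12 operations per joule."""
    return ops_per_inference / (tops_per_w * 1e12)


edge_npu = tops_per_watt(4, 2)        # hypothetical edge ASIC
big_accel = tops_per_watt(400, 400)   # hypothetical data center part
model_ops = 8e9                       # hypothetical 8 GOP model

print(edge_npu, energy_per_inference_j(model_ops, edge_npu))
print(big_accel, energy_per_inference_j(model_ops, big_accel))
```

Under these assumptions the edge NPU spends about 4 mJ per inference versus 8 mJ for the larger part, which is why battery-powered devices favor dedicated ASICs even though their absolute throughput is far lower.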

Applications:

- Google TPU (specifically for AI inference/training)

- NPU (Neural Processing Unit) in AI cameras

- Voice recognition chips in smart speakers and headphones

FPGA (Field Programmable Gate Array)

FPGA is a programmable logic device that sits between the general-purpose GPU and the specialized ASIC. It is programmable and flexible, used in scenarios requiring high real-time performance or where algorithms are frequently changing.

Packaging:

FPGAs come in medium to large BGA packages (e.g., high-end Xilinx and Intel/Altera FPGAs). The package is smaller than a large server GPU but larger than a low-power edge ASIC.

Power & cooling:

- Variable power consumption: low-end units draw tens of watts, while high-end FPGAs draw from tens of watts up to about 200W.

- Often uses air-cooled heatsinks; high-end accelerator cards may add liquid cooling.

Key features (AI):

- Strong flexibility, allowing rapid iteration of AI algorithms at the hardware level.

- Low latency, suitable for applications with stringent real-time requirements.

- Less power-efficient than an ASIC and harder to develop for than a GPU.
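One common reason FPGAs achieve low, predictable latency is that a model can be laid out as a fixed hardware pipeline: each input passes through a known number of clock cycles, and a new input can enter every cycle. The sketch contrasts this with the extra wait a batched accelerator incurs while it collects a batch. The 200-stage pipeline, 250 MHz clock, batch of 32, and 10,000 requests/s arrival rate are all hypothetical numbers, and the batching model assumes steady arrivals.

```python
def pipeline_latency_s(depth_cycles: int, f_clk_hz: float) -> float:
    """Time for one input to traverse a fixed-depth hardware pipeline."""
    return depth_cycles / f_clk_hz


def pipeline_throughput(f_clk_hz: float, initiation_interval: int = 1) -> float:
    """Results per second when a new input enters every `initiation_interval` cycles."""
    return f_clk_hz / initiation_interval


def batching_wait_s(batch_size: int, arrival_rate_per_s: float) -> float:
    """Time to collect a full batch, assuming steady request arrivals."""
    return batch_size / arrival_rate_per_s


print(pipeline_latency_s(200, 250e6))   # FPGA pipeline latency in seconds
print(pipeline_throughput(250e6))       # results per second at II = 1
print(batching_wait_s(32, 10_000))      # wait before a batch of 32 can start
```

Here the pipeline answers in under a microsecond, while the batched path waits milliseconds before computing anything, which matters in base stations and inspection lines with hard real-time deadlines.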

Applications:

- Edge AI acceleration cards

- AI inference in communication base stations

- Industrial inspection equipment

CPU (Central Processing Unit)

The CPU is a general-purpose computing core, primarily responsible for scheduling and control. Its raw compute power for AI is modest, but it is essential for control, data handling, and lightweight AI tasks at the edge.

Packaging:

CPUs use LGA (land grid array) or PGA (pin grid array) packages (e.g., Intel server CPUs use LGA; older AMD desktop CPUs on the AM4 socket use PGA, while AM5 moved to LGA). Server CPUs have numerous contacts to support multi-core architectures.

Power & cooling:

- Power consumption ranges from tens of watts (mobile) to 150W to 350W (server).

- Requires a heatsink and fan or liquid cooling; high-end CPUs require active cooling with heat pipes or liquid cooling.

Thermal design focus:

- Server CPU core temperature is kept between 60℃ and 85℃.

- Air-cooled heatsink design and airflow management are critical.
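Airflow management starts with an energy balance: the air must carry away the heat, so P = ρ · c_p · V̇ · ΔT, where V̇ is volumetric airflow and ΔT is the allowed air temperature rise. The sketch solves for V̇ in CFM, assuming sea-level air at about 20℃ (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)); the 350W CPU and 15℃ rise are illustrative inputs.

```python
RHO_AIR = 1.2             # kg/m^3, assumed sea-level air at ~20 degC
CP_AIR = 1005.0           # J/(kg*K), specific heat of air
FT3_IN_M3 = 0.028316846592  # one cubic foot in cubic meters (exact)


def required_cfm(power_w: float, delta_t_c: float) -> float:
    """Airflow (cubic feet per minute) needed to remove power_w
    with an air temperature rise of delta_t_c."""
    m3_per_s = power_w / (RHO_AIR * CP_AIR * delta_t_c)
    return m3_per_s * 60 / FT3_IN_M3


print(round(required_cfm(350, 15), 1))   # 350 W server CPU, 15 degC air rise
```

This reproduces the familiar rule of thumb CFM ≈ 1.76 × P / ΔT(℃): a 350W CPU needs roughly 41 CFM through its heatsink at a 15℃ rise, and halving the allowed rise doubles the required airflow.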

Key features (AI):

- Unsuitable for large-scale AI training (insufficient compute power), but indispensable for system operation.

- Primarily handles control, data movement, and coordination with other accelerators.

Applications:

- AI servers: The CPU handles data pre-processing and task scheduling, while the GPU/ASIC executes core computation.

- Edge devices: Lightweight AI inference (e.g., ARM CPU + small models).

- Mobile devices: ARM CPU working in tandem with an NPU/DSP.
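The division of labor described above (CPU preprocesses and schedules, accelerator computes) is typically a producer-consumer pipeline. The sketch below models it with a CPU thread that normalizes and batches samples and a worker thread standing in for the accelerator. `accelerator_infer` is a hypothetical placeholder that just sums each sample; in a real system it would be a call into a GPU or ASIC runtime.

```python
import queue
import threading


def preprocess(raw):
    """CPU-side step: scale 0-255 pixel values to 0.0-1.0 (illustrative)."""
    return [x / 255.0 for x in raw]


def accelerator_infer(batch):
    """Hypothetical stand-in for a GPU/ASIC call: sums each sample."""
    return [sum(sample) for sample in batch]


def run_pipeline(raw_samples, batch_size=2):
    q = queue.Queue(maxsize=4)   # bounded queue applies back-pressure to the CPU side
    results = []

    def producer():              # CPU: preprocess and schedule batches
        batch = []
        for raw in raw_samples:
            batch.append(preprocess(raw))
            if len(batch) == batch_size:
                q.put(batch)
                batch = []
        if batch:
            q.put(batch)         # flush the final partial batch
        q.put(None)              # sentinel: no more work

    def consumer():              # accelerator worker
        while (batch := q.get()) is not None:
            results.extend(accelerator_infer(batch))

    workers = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return results


print(run_pipeline([[255, 255], [0, 255], [0, 0]]))
```

The bounded queue keeps the CPU from running far ahead of the accelerator, and overlapping the two stages is what lets the accelerator stay busy instead of waiting on data preparation.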

Partner with PCBONLINE for Turnkey PCBs for AI Devices

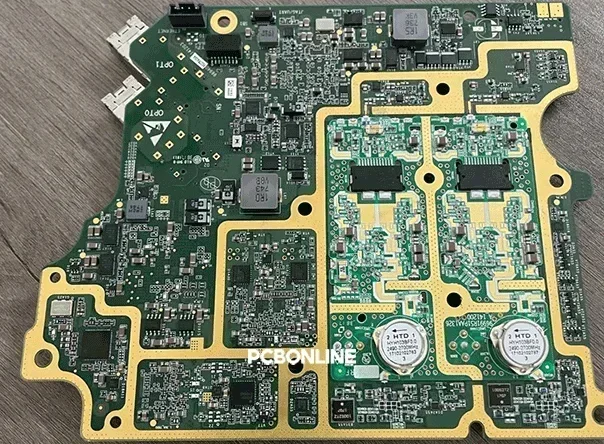

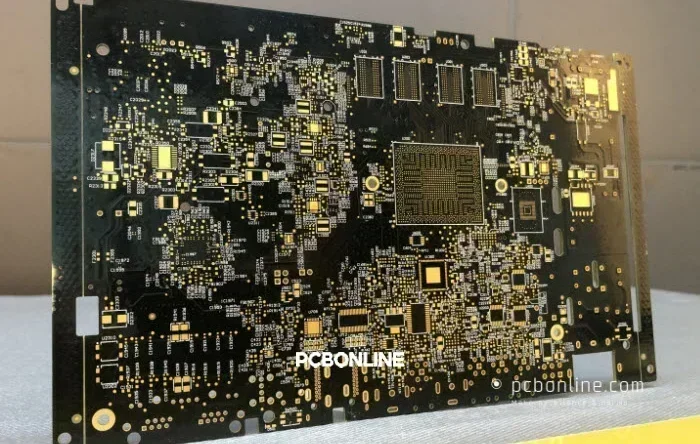

If you are designing AI servers, network equipment, accelerators, or similar devices, work with PCBONLINE for turnkey OEM PCB service. We provide AI device PCB manufacturing services that cover GPU/FPGA/ASIC/CPU PCB manufacturing, assembly, testing, and reliability assurance.

Founded in 2005, PCBONLINE has two large advanced PCB manufacturing bases and one PCB assembly factory.

AI server PCB fabrication with HDI via-in-pad copper filling for GPU designs with 24+ layers.

We provide one-stop HDI PCB manufacturing, including component sourcing, PCB fabrication, assembly, testing, and system integration using any GPU, FPGA, ASIC, or CPU.

SMT assembly with fine-pitch BGA placement accuracy and custom reflow profiles for warpage-free soldering.

100% X-ray inspection, functional testing, and burn-in testing for guaranteed reliability.

We have rich experience in impedance control, reflow oven temperature control, and assembly fixture design.

Cloud-based IC programming that you control, protecting your intellectual property.

High-quality PCBA manufacturing certified with ISO 9001:2015, ISO 14001:2015, IATF 16949:2016, RoHS, REACH, UL, and IPC-A-610 Class 2/3.
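The impedance control mentioned above starts at layout time, where trace width is sized for a target impedance (often 50 Ω single-ended). A common first estimate for a surface microstrip is the IPC-2141 approximation Z0 = 87/√(εr + 1.41) · ln(5.98h / (0.8w + t)), usually quoted as valid for roughly 0.1 < w/h < 2.0. The stackup values below (0.1 mm dielectric, 35 µm copper, εr = 4.2 for a generic FR-4) are illustrative assumptions; production stackups are verified with a field solver and the fabricator's actual material data.

```python
import math


def microstrip_z0(w_mm: float, h_mm: float, t_mm: float, er: float) -> float:
    """IPC-2141 surface microstrip approximation (ohms).

    w_mm: trace width, h_mm: dielectric height to the reference plane,
    t_mm: copper thickness, er: dielectric constant.
    Only a rough estimate; commonly quoted as valid for about 0.1 < w/h < 2.0.
    """
    return 87.0 / math.sqrt(er + 1.41) * math.log(5.98 * h_mm / (0.8 * w_mm + t_mm))


# Assumed stackup: 0.1 mm FR-4 (er = 4.2), 35 um copper.
for width in (0.12, 0.15, 0.18):
    print(width, round(microstrip_z0(width, 0.1, 0.035, 4.2), 1))
```

Wider traces lower the impedance; with these assumptions a 0.15 mm trace lands close to 50 Ω.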

At PCBONLINE, we help our clients reduce costs while achieving the highest levels of performance and reliability. If you are interested in AI server PCBs from PCBONLINE, send your inquiry by email to info@pcbonline.com.

Conclusion

GPU, FPGA, ASIC, and CPU are all used in AI applications, but the GPU is the most widely used because of its high compute power and strength in parallel computing. PCBs for AI devices, especially AI servers, are typically HDI boards with 24+ layers that power GPUs for deep learning and high-performance computing. China supplies over 70% of AI server PCBs, with PCBONLINE standing out as a reliable turnkey AI PCB manufacturer. If you are looking for a trusted partner for AI device PCBs, contact PCBONLINE for turnkey OEM manufacturing.
